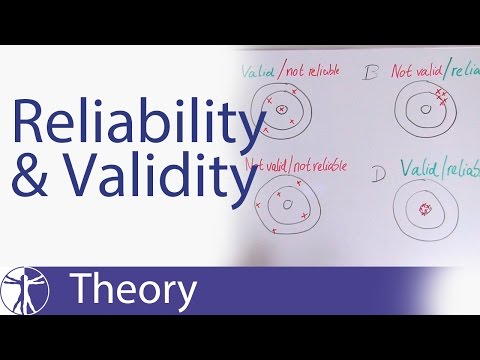

Validity is the extent to which an instrument measures what it was designed to measure. Research commonly distinguishes three types of validity: content validity, construct validity, and criterion-related validity.

Q. When a test measures what it is supposed to measure, the test is said to be?

Such a test is said to be valid. Test validity is the extent to which a test (such as a chemical, physical, or scholastic test) accurately measures what it is supposed to measure.

Table of Contents

- Q. When a test measures what it is supposed to measure, the test is said to be?

- Q. How do you measure the validity of a test?

- Q. When a test measures something consistently, this is known as?

- Q. How is reliability determined?

- Q. What is a reliable test?

- Q. What is a good number for Cronbach’s alpha?

- Q. What is the purpose of Cronbach’s alpha?

- Q. Is Cronbach’s alpha used in qualitative research?

- Q. Why is Cronbach’s alpha sometimes negative?

- Q. What is a reliability coefficient in testing?

- Q. Can KR-20 be negative?

- Q. What does a negatively discriminating item do on a classroom test?

- Q. How do you calculate KR-21?

- Q. What is the Kuder–Richardson method?

Q. How do you measure the validity of a test?

The criterion-related validity of a test is measured by the validity coefficient. This is the correlation, “r,” between scores on the test and scores on a criterion measure such as job performance, usually reported as a number between 0 and 1.00 that indicates the strength of the relationship.
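A validity coefficient is typically a Pearson correlation between test scores and a criterion measure. The minimal sketch below computes r from scratch, assuming Pearson's r is the coefficient in use; the function name `pearson_r` and the test scores and job ratings are hypothetical, invented only for illustration.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations from the means
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Root sums of squared deviations (the 1/n factors cancel in the ratio)
    sx = sqrt(sum((a - mean_x) ** 2 for a in x))
    sy = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical data: selection-test scores and later supervisor ratings (criterion)
test_scores = [55, 62, 70, 48, 80, 66]
job_ratings = [3.1, 3.4, 4.0, 2.8, 4.5, 3.5]

validity_coefficient = pearson_r(test_scores, job_ratings)
print(f"validity coefficient r = {validity_coefficient:.2f}")
```

A coefficient near 1.00 means the test ranks people much as the criterion does; a value near 0 means the test tells you little about the criterion.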

Q. When a test measures something consistently, this is known as?

Reliability refers to the consistency of a measure. Psychologists consider three types of consistency: over time (test-retest reliability), across items (internal consistency), and across different researchers (inter-rater reliability).

Q. How is reliability determined?

Reliability is the degree to which an assessment tool produces stable and consistent results. Test-retest reliability, for example, is obtained by administering the same test twice, some time apart, to the same group of individuals and correlating the two sets of scores.

Q. What is a reliable test?

Test reliability refers to the degree to which a test is consistent and stable in measuring what it is intended to measure. Put most simply, a test is reliable if it is consistent within itself and across time.

Q. What is a good number for Cronbach’s alpha?

The general rule of thumb is that a Cronbach’s alpha of .70 and above is good, .80 and above is better, and .90 and above is best.

Q. What is the purpose of Cronbach’s alpha?

Cronbach’s alpha is a measure used to assess the reliability, or internal consistency, of a set of scale or test items.
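Cronbach’s alpha compares the sum of the individual item variances with the variance of the total scale score: alpha = k/(k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch below is a minimal implementation under the assumption that population variances are used throughout (the choice cancels in the ratio as long as it is consistent); the response data are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of scores."""
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Variance of each item across respondents (zip(*scores) transposes the matrix)
    item_var_sum = sum(pvariance(item) for item in zip(*scores))
    # Variance of respondents' total scale scores
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical data: 5 respondents answering 3 items on a 1-5 scale
responses = [
    [4, 5, 4],
    [2, 3, 2],
    [3, 3, 3],
    [5, 4, 5],
    [1, 2, 2],
]
alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.2f}")  # → alpha = 0.94
```

When items move together, the total-score variance is large relative to the summed item variances, which pushes alpha toward 1.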

Q. Is Cronbach’s alpha used in qualitative research?

Quantitative studies use statistical indices such as Cronbach’s alpha to quantify reliability, but comparable measures are not widely available for qualitative studies, where judgments of reliability tend to be predominantly subjective.

Q. Why is Cronbach’s alpha sometimes negative?

A negative Cronbach’s alpha indicates inconsistent coding or a mixture of items measuring different dimensions, either of which produces negative inter-item correlations. An item that correlates negatively with the others usually needs to be recoded in the opposite direction.
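The effect of a single unrecoded item can be seen in a small sketch. It assumes a hypothetical 1-5 Likert scale where item 3 is negatively worded and was entered without reverse coding; the helper `reverse_code` (hypothetical) flips a response as `scale_min + scale_max - value`, and `cronbach_alpha` uses population variances.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items score matrix (population variances)."""
    k = len(scores[0])
    item_var_sum = sum(pvariance(item) for item in zip(*scores))
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def reverse_code(value: int, scale_min: int = 1, scale_max: int = 5) -> int:
    """Flip a Likert response, e.g. 1 <-> 5 and 2 <-> 4 on a 1-5 scale."""
    return scale_min + scale_max - value


# Hypothetical 1-5 responses; item 3 is negatively worded and was not recoded
raw = [
    [4, 5, 2],
    [2, 3, 4],
    [3, 3, 3],
    [5, 4, 1],
    [1, 2, 4],
]
alpha_raw = cronbach_alpha(raw)  # negative: item 3 pulls against items 1 and 2

# Reverse-code item 3 (column index 2) and recompute
recoded = [[a, b, reverse_code(c)] for a, b, c in raw]
alpha_recoded = cronbach_alpha(recoded)

print(f"before recoding: {alpha_raw:.2f}")      # → before recoding: -2.25
print(f"after recoding:  {alpha_recoded:.2f}")  # → after recoding:  0.94
```

Reverse coding leaves each item's variance unchanged but flips its correlation with the other items, so only the total-score variance (and therefore alpha) changes.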

Q. What is a reliability coefficient in testing?

A reliability coefficient is a measure of the consistency of a test or measuring instrument, obtained by measuring the same individuals twice and computing the correlation between the two sets of measures.

Q. Can KR-20 be negative?

KR-20 values usually range from 0 to 1, although a negative value is possible; 0 indicates no reliability and 1 indicates perfect test reliability. A KR-20 above 0.70 is generally considered to represent a reasonable level of internal consistency reliability.
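KR-20 applies the same idea as Cronbach’s alpha to items scored 0 or 1: KR-20 = k/(k - 1) * (1 - sum of p*q / variance of total scores), where p is the proportion answering an item correctly and q = 1 - p. The minimal sketch below assumes population variance for the total scores (matching p*q, which is the population variance of a 0/1 item); both response matrices are hypothetical, and the second shows how items that pull in opposite directions drive KR-20 below zero.

```python
from statistics import pvariance


def kr20(responses: list[list[int]]) -> float:
    """KR-20 for a respondents x items matrix of 0/1 (wrong/right) scores."""
    n = len(responses)
    k = len(responses[0])  # number of items
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance; KR-20 is undefined")
    pq_sum = 0.0
    for item in zip(*responses):
        p = sum(item) / n  # proportion of examinees answering correctly
        pq_sum += p * (1 - p)  # p * q is the variance of a 0/1 item
    return k / (k - 1) * (1 - pq_sum / total_var)


# Hypothetical 6 examinees x 4 items
consistent = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
]
# Hypothetical 4 examinees x 3 items; items 1 and 2 are answered in opposite ways
inconsistent = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 0],
    [0, 1, 1],
]
print(f"{kr20(consistent):.2f}")    # → 0.83
print(f"{kr20(inconsistent):.2f}")  # → -3.00
```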

Q. What does a negatively discriminating item do on a classroom test?

An item's discrimination index compares performance on that item with overall test performance. The greater the positive value (the closer it is to 1.0), the stronger the relationship between overall test performance and performance on that item. A negative discrimination index means that, for some reason, students who scored low on the test overall were more likely to answer the item correctly than students who scored high.
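One common way to compute a discrimination index (assumed here; other variants, such as the point-biserial correlation, exist) is the upper-minus-lower method: D = proportion correct in the highest-scoring group minus proportion correct in the lowest-scoring group. The sketch below assumes groups made of the top and bottom 27% of examinees, a widely used convention; the function name and all data are hypothetical.

```python
def discrimination_index(
    item: list[int], totals: list[float], group_fraction: float = 0.27
) -> float:
    """Upper-minus-lower discrimination index for one 0/1-scored item.

    D = (proportion correct in the top group) - (proportion correct in the bottom group),
    where each group is the highest- or lowest-scoring `group_fraction` of examinees.
    """
    n = len(totals)
    g = max(1, round(n * group_fraction))  # size of each extreme group
    order = sorted(range(n), key=lambda i: totals[i])  # examinee indices, lowest total first
    lower, upper = order[:g], order[-g:]
    p_upper = sum(item[i] for i in upper) / g
    p_lower = sum(item[i] for i in lower) / g
    return p_upper - p_lower


# Hypothetical total test scores for 10 students
totals = [9, 8, 8, 7, 6, 5, 4, 3, 2, 1]
# An item answered correctly mainly by high scorers
good_item = [1, 1, 1, 1, 1, 0, 1, 0, 0, 0]
# A negatively discriminating item: answered correctly mainly by low scorers
bad_item = [0, 0, 0, 1, 0, 1, 1, 1, 1, 1]

print(discrimination_index(good_item, totals))  # → 1.0
print(discrimination_index(bad_item, totals))   # → -1.0
```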

Q. How do you calculate KR-21?

For a scale score X, KR-21 = K/(K - 1) * (1 - U*(K - U)/(K*V)), where K is the number of items, U is the mean of X, and V is the variance of X.
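KR-21 needs only the number of items and the mean and variance of the total scores, which is why it is convenient to compute by hand; it assumes the items are of roughly equal difficulty. The minimal sketch below transcribes the formula directly, assuming population variance for V; the function name `kr21` and the score list are hypothetical.

```python
from statistics import mean, pvariance


def kr21(k: int, u: float, v: float) -> float:
    """KR-21 = K/(K - 1) * (1 - U*(K - U)/(K*V)).

    k: number of items, u: mean total score, v: variance of total scores.
    """
    return k / (k - 1) * (1 - u * (k - u) / (k * v))


# Hypothetical total scores of 6 examinees on a 4-item test
totals = [4, 3, 2, 1, 0, 4]
value = kr21(k=4, u=mean(totals), v=pvariance(totals))
print(f"KR-21 = {value:.2f}")  # → KR-21 = 0.75
```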

Q. What is the Kuder–Richardson method?

In psychometrics, the Kuder–Richardson formulas, published by Kuder and Richardson in 1937, measure the internal consistency reliability of tests made up of dichotomous items (for example, items scored right or wrong). The best known are KR-20 and KR-21.