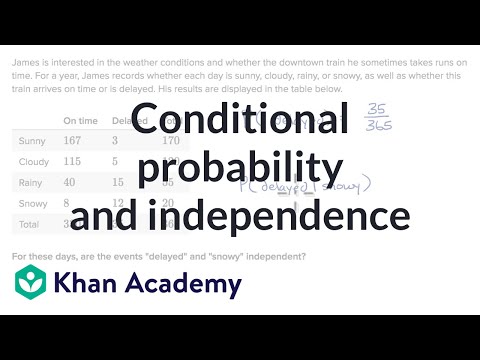

A conditional probability is the probability that an event has occurred, taking into account additional information about the result of the experiment. Two events A and B are independent if the probability P(A∩B) of their intersection A ∩ B is equal to the product P(A)·P(B) of their individual probabilities.

Q. What is the difference between joint and conditional probability?

Joint probability is the probability of two events occurring together, written P(A∩B). Marginal probability is the probability of an event irrespective of the outcome of another variable, written P(A). Conditional probability is the probability of one event occurring given that a second event has occurred, written P(A|B).
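As a minimal sketch of how the three quantities relate, the following computes each one from a small table of counts. The weather/arrival data and the function names are hypothetical and chosen only for illustration.

```python
from fractions import Fraction

# Hypothetical counts for two variables (illustrative data only).
counts = {
    ("rain", "late"): 3,
    ("rain", "on_time"): 1,
    ("dry", "late"): 2,
    ("dry", "on_time"): 4,
}
total = sum(counts.values())


def joint(weather, arrival):
    """P(weather AND arrival): the cell count divided by the grand total."""
    return Fraction(counts[(weather, arrival)], total)


def marginal_weather(weather):
    """P(weather): sum the joint probabilities over every arrival outcome."""
    return sum(joint(w, a) for (w, a) in counts if w == weather)


def conditional_arrival_given_weather(arrival, weather):
    """P(arrival | weather) = P(weather AND arrival) / P(weather)."""
    return joint(weather, arrival) / marginal_weather(weather)


print(joint("rain", "late"))                              # joint
print(marginal_weather("rain"))                           # marginal
print(conditional_arrival_given_weather("late", "rain"))  # conditional
```

The conditional probability is larger than the joint probability here because it restricts attention to the rainy days only.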

Table of Contents

- Q. What is the difference between joint and conditional probability?

- Q. How do you calculate conditional percentages?

- Q. How do you know if two probabilities are independent?

- Q. What is the opposite of conditional probability?

- Q. Why is the Bayes classifier optimal?

- Q. How is Bayes risk calculated?

- Q. What is the difference between Bayes and naive Bayes?

- Q. How does Bayes optimal classifier work?

- Q. How is Bayes' theorem used for classification?

Q. How do you calculate conditional percentages?

Conditional percentages are calculated for each value of the explanatory variable separately. They can be row percents, if the explanatory variable “sits” in the rows, or column percents, if the explanatory variable “sits” in the columns.
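A minimal sketch of row percents, assuming the explanatory variable sits in the rows of a two-way table. The smoking/disease counts and the `row_percents` helper are made up for illustration.

```python
def row_percents(table):
    """Return each cell as a percentage of its own row total."""
    result = {}
    for row_label, cells in table.items():
        row_total = sum(cells.values())
        result[row_label] = {
            col: 100.0 * count / row_total for col, count in cells.items()
        }
    return result


# Hypothetical two-way table: rows are the explanatory variable.
table = {
    "smoker": {"disease": 30, "healthy": 70},
    "non_smoker": {"disease": 10, "healthy": 190},
}

for row_label, percents in row_percents(table).items():
    print(row_label, percents)
```

Each row is normalized by its own total, so the percentages within a row sum to 100 and rows with different sample sizes can be compared directly.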

Q. How do you know if two probabilities are independent?

Events A and B are independent if the equation P(A∩B) = P(A) · P(B) holds true. You can use the equation to check if events are independent; multiply the probabilities of the two events together to see if they equal the probability of them both happening together.
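The check can be sketched for a fair six-sided die, where every outcome is equally likely. The events chosen and the helper names `prob` and `independent` are illustrative assumptions.

```python
from fractions import Fraction

# Sample space of one roll of a fair die (assumed equally likely outcomes).
outcomes = set(range(1, 7))


def prob(event):
    """Probability of an event, given as a set of outcomes."""
    return Fraction(len(event & outcomes), len(outcomes))


def independent(a, b):
    """True when P(A and B) equals P(A) * P(B)."""
    return prob(a & b) == prob(a) * prob(b)


even = {2, 4, 6}
at_most_four = {1, 2, 3, 4}
at_least_four = {4, 5, 6}

print(independent(even, at_most_four))   # product rule holds
print(independent(even, at_least_four))  # product rule fails
```

Exact fractions are used instead of floats so the equality test is not affected by rounding.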

Q. What is the opposite of conditional probability?

The opposite (inverse) of the conditional probability P(A|B) is P(B|A). If you have P(A|B), you can get P(B|A) by Bayes' rule, P(B|A) = P(A|B) · P(B) / P(A), provided you also know the marginal probabilities P(A) and P(B). You do not need a conditional probability at hand, however: P(B|A) can also be computed directly from its definition, P(B|A) = P(A∩B) / P(A).
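A minimal sketch of inverting a conditional probability with Bayes' rule, P(B|A) = P(A|B) · P(B) / P(A). The function name and the numeric inputs are hypothetical.

```python
from fractions import Fraction


def invert_conditional(p_a_given_b, p_a, p_b):
    """Return P(B|A) from P(A|B), P(A) and P(B) using Bayes' rule."""
    if p_a == 0:
        raise ValueError("P(A) must be positive to condition on A")
    return p_a_given_b * p_b / p_a


# Illustrative inputs: P(A|B) = 3/4, P(A) = 1/2, P(B) = 2/5.
p_b_given_a = invert_conditional(Fraction(3, 4), Fraction(1, 2), Fraction(2, 5))
print(p_b_given_a)
```

Note that the two marginal probabilities are required inputs; P(A|B) alone does not determine P(B|A).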

Q. Why is the Bayes classifier optimal?

The Bayes optimal classifier predicts the most probable value among all possible target values v, so it maximizes the performance measure $e(\hat{f})$. For this reason, the Bayes classifier is used as a benchmark against which the performance of all other classifiers is compared.

Q. How is Bayes risk calculated?

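The Bayes approach is an average-case analysis: it considers the average risk of an estimator over all $\theta \in \Theta$. Concretely, we set a probability distribution (prior) $\pi$ on $\Theta$. Then the average risk (with respect to $\pi$) is defined as $R_\pi(\hat{\theta}) = \mathbb{E}_{\theta \sim \pi} R_\theta(\hat{\theta}) = \mathbb{E}_{\theta, X}\, \ell(\theta, \hat{\theta})$.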
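To make the average-risk definition concrete, here is a small sketch under assumptions that are not in the text above: a single observation X ~ Bernoulli(θ), the estimator θ̂(X) = X, squared-error loss, and a uniform prior on three values of θ. It computes the average risk of this one estimator, not the minimum over all estimators.

```python
from fractions import Fraction


def risk_of_identity_estimator(theta):
    """R_theta for the estimator X under squared-error loss.

    X equals 1 with probability theta and 0 with probability 1 - theta,
    so the expected loss is theta*(1-theta)^2 + (1-theta)*(0-theta)^2.
    """
    return theta * (1 - theta) ** 2 + (1 - theta) * (0 - theta) ** 2


def average_risk(prior, risk):
    """Average the per-theta risk over a discrete prior {theta: weight}."""
    return sum(weight * risk(theta) for theta, weight in prior.items())


# Hypothetical uniform prior on three parameter values.
prior = {
    Fraction(1, 5): Fraction(1, 3),
    Fraction(1, 2): Fraction(1, 3),
    Fraction(4, 5): Fraction(1, 3),
}

print(average_risk(prior, risk_of_identity_estimator))
```

With a continuous prior the sum would become an integral over Θ; the discrete prior is used only to keep the arithmetic exact.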

Q. What is the difference between Bayes and naive Bayes?

Naive Bayes assumes conditional independence, P(X|Y,Z) = P(X|Z), for all attributes given the class, whereas more general Bayes nets (sometimes called Bayesian belief networks) allow the user to specify which attributes are, in fact, conditionally independent.

Q. How does Bayes optimal classifier work?

The Bayes optimal classifier is a probabilistic model that makes the most probable prediction for a new example. It relies on Bayes' theorem, which provides a principled way to calculate a conditional probability known as the posterior probability.
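One way to sketch "the most probable prediction" is to let every hypothesis vote for its predicted label, weighted by its posterior probability. The three hypotheses, their posteriors, and their deterministic predictions below are hypothetical, and the sketch assumes each hypothesis predicts exactly one label.

```python
from fractions import Fraction

# Hypothetical posteriors P(h | data) for three candidate hypotheses.
posterior = {
    "h1": Fraction(2, 5),
    "h2": Fraction(3, 10),
    "h3": Fraction(3, 10),
}

# The label each hypothesis predicts for the new example (assumed deterministic).
prediction = {"h1": "+", "h2": "-", "h3": "-"}


def bayes_optimal_prediction(posterior, prediction):
    """Return the label with the largest total posterior weight, plus all scores."""
    score = {}
    for hypothesis, weight in posterior.items():
        label = prediction[hypothesis]
        score[label] = score.get(label, 0) + weight
    best = max(score, key=score.get)
    return best, score


label, score = bayes_optimal_prediction(posterior, prediction)
print(label, score)
```

In this example the single most probable hypothesis, h1, predicts "+", yet the combined prediction is "-", because the hypotheses predicting "-" carry more posterior weight in total.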

Q. How is Bayes' theorem used for classification?

Bayesian classification uses Bayes' theorem to predict the class Y of an example from its observed features X. P(Y|X) is a conditional probability that describes the probability of event Y given that X is true. P(X) and P(Y) are the probabilities of observing X and Y independently of each other. These are known as marginal probabilities.
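A minimal sketch of classification with Bayes' theorem, P(Y|X) = P(X|Y) · P(Y) / P(X), for a single observed feature X. The spam/ham classes, the priors, and the likelihoods are invented numbers for illustration.

```python
from fractions import Fraction

# Hypothetical class priors P(Y).
prior = {"spam": Fraction(2, 5), "ham": Fraction(3, 5)}

# Hypothetical likelihoods P(X | Y) of the observed feature under each class.
likelihood = {"spam": Fraction(1, 2), "ham": Fraction(1, 10)}


def posterior(prior, likelihood):
    """Return P(Y | X) for every class Y using Bayes' theorem."""
    # Marginal P(X), obtained by summing P(X | Y) * P(Y) over the classes.
    evidence = sum(likelihood[y] * prior[y] for y in prior)
    return {y: likelihood[y] * prior[y] / evidence for y in prior}


def classify(prior, likelihood):
    """Pick the class with the highest posterior probability."""
    post = posterior(prior, likelihood)
    return max(post, key=post.get)


print(posterior(prior, likelihood))
print(classify(prior, likelihood))
```

Because P(X) is the same for every class, it only rescales the posteriors; the predicted class would be unchanged if the division were skipped.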