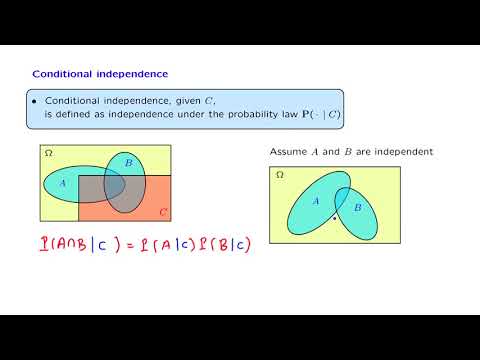

A Bayesian network is a graphical representation of conditional independence and conditional probabilities. Informally, a variable is conditionally independent of another, if your belief in the value of the latter wouldn’t influence your belief in the value of the former.

Q. How do I train Bayesian network?

How to train a Bayesian Network (BN) using expert knowledge?

Table of Contents

- Q. How do I train Bayesian network?

- Q. Is Bayesian network a machine learning?

- Q. What are Bayesian neural networks?

- Q. What is Bayesian belief network in data mining?

- Q. How is Bayes theorem useful?

- Q. Why is naive Bayes called naive?

- Q. What is the main idea of naive Bayesian classification?

- Q. What is the naive rule?

- Q. What are the assumptions of naive Bayes?

- Q. Why naive Bayes is a bad estimator?

- Q. When should I use naive Bayes?

- Q. Why is naive Bayes better than decision tree?

- Q. Is decision tree generative or discriminative?

- Q. How do you determine the best split in decision tree?

- Q. Which is better Knn or decision tree?

- Q. What are the pros and cons of decision tree analysis?

- Q. Why do we use KNN?

- First, identify which are the main variable in the problem to solve. Each variable corresponds to a node of the network.

- Second, define structure of the network, that is, the causal relationships between all the variables (nodes).

- Third, define the probability rules governing the relationships between the variables.

Q. Is Bayesian network a machine learning?

Bayesian networks (BN) and Bayesian classifiers (BC) are traditional probabilistic techniques that have been successfully used by various machine learning methods to help solving a variety of problems in many different domains.

Q. What are Bayesian neural networks?

Bayesian neural networks are stochastic neural networks with priors. with all other possible parametrizations discarded. The cost function is often defined as the log likelihood of the training set, sometimes with a regularization term to penalize parametrizations.

Q. What is Bayesian belief network in data mining?

Bayesian Belief Network Bayesian Belief Networks specify joint conditional probability distributions. They are also known as Belief Networks, Bayesian Networks, or Probabilistic Networks. A Belief Network allows class conditional independencies to be defined between subsets of variables.

Q. How is Bayes theorem useful?

As an example, Bayes’ theorem can be used to determine the accuracy of medical test results by taking into consideration how likely any given person is to have a disease and the general accuracy of the test. Posterior probability is calculated by updating the prior probability by using Bayes’ theorem.

Q. Why is naive Bayes called naive?

Naive Bayes is called naive because it assumes that each input variable is independent. This is a strong assumption and unrealistic for real data; however, the technique is very effective on a large range of complex problems.

Q. What is the main idea of naive Bayesian classification?

A naive Bayes classifier assumes that the presence (or absence) of a particular feature of a class is unrelated to the presence (or absence) of any other feature, given the class variable. Basically, it’s “naive” because it makes assumptions that may or may not turn out to be correct.

Q. What is the naive rule?

It is a classification technique based on Bayes’ Theorem with an assumption of independence among predictors. In simple terms, a Naive Bayes classifier assumes that the presence of a particular feature in a class is unrelated to the presence of any other feature.

Q. What are the assumptions of naive Bayes?

Naive Bayes is so called because the independence assumptions we have just made are indeed very naive for a model of natural language. The conditional independence assumption states that features are independent of each other given the class.

Q. Why naive Bayes is a bad estimator?

On the other side naive Bayes is also known as a bad estimator, so the probability outputs are not to be taken too seriously. Another limitation of Naive Bayes is the assumption of independent predictors. In real life, it is almost impossible that we get a set of predictors which are completely independent.

Q. When should I use naive Bayes?

Naive Bayes is the most straightforward and fast classification algorithm, which is suitable for a large chunk of data. Naive Bayes classifier is successfully used in various applications such as spam filtering, text classification, sentiment analysis, and recommender systems.

Q. Why is naive Bayes better than decision tree?

Naive bayes will answer as a continuous classifier. Naive bayes does quite well when the training data doesn’t contain all possibilities so it can be very good with low amounts of data. Decision trees work better with lots of data compared to Naive Bayes.

Q. Is decision tree generative or discriminative?

SVMs and decision trees are discriminative models because they learn explicit boundaties between classes. SVM is a maximal margin classifier, meaning that it learns a decision boundary that maximizes the distance between samples of the two classes, given a kernel.

Q. How do you determine the best split in decision tree?

Steps to split a decision tree using Information Gain:

- For each split, individually calculate the entropy of each child node.

- Calculate the entropy of each split as the weighted average entropy of child nodes.

- Select the split with the lowest entropy or highest information gain.

Q. Which is better Knn or decision tree?

Decision tree vs KNN : Both are non-parametric methods. Decision tree supports automatic feature interaction, whereas KNN cant. Decision tree is faster due to KNN’s expensive real time execution.

Q. What are the pros and cons of decision tree analysis?

Decision tree learning pros and cons

- Easy to understand and interpret, perfect for visual representation.

- Can work with numerical and categorical features.

- Requires little data preprocessing: no need for one-hot encoding, dummy variables, and so on.

- Non-parametric model: no assumptions about the shape of data.

- Fast for inference.

Q. Why do we use KNN?

K-Nearest Neighbors algorithm (or KNN) is one of the most used learning algorithms due to its simplicity. So what is it? KNN is a lazy learning, non-parametric algorithm. It uses data with several classes to predict the classification of the new sample point.